Congratulations to C. M. Downey, who received a prestigious and highly competitive Presidential Dissertation Fellowship! Downey’s dissertation work follows from a wider research goal of improving Natural Language Processing (NLP) tools for under-resourced languages (i.e., those which lack abundant text data).

Modern NLP relies heavily on large neural networks trained on data from very high-resource languages such as English and Chinese. These techniques are largely inapplicable to the majority of the world’s languages, including minority, endangered, and Indigenous languages, which lack the requisite large text datasets. This methodological gap between high- and low-resource settings limits the potentially vital role NLP can play in creating tools such as assisted text completion and keyboard auto-correct, automatic speech recognition, and machine translation. Developing such tools helps ensure that minority and endangered languages can thrive in the digital era.

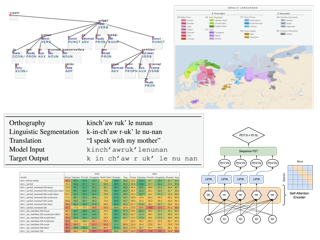

Downey’s dissertation introduces several novel methods for adapting pre-trained multilingual language models to under-resourced languages, including research conducted as part of their general papers. With the support of the Presidential Dissertation Fellowship, they will complete the research project that will form the final chapter of the dissertation.

Large mainstream multilingual models (such as XLM-R, mBART, and BLOOM) typically aim to include as wide a range of the world’s languages as possible, with the goal of being “cross-lingual.” Downey proposes adapting these models to narrower, linguistically informed groupings of languages to address the so-called “curse of multilinguality”: the well-established finding that multilingual models covering many languages typically perform worse on downstream tasks than those covering fewer. Rather than keeping a model broadly “cross-lingual” or specializing it for a single language, the work will investigate adaptation techniques for groups of under-resourced languages such as the Uralic family, the Bantu branch, or the Caucasus linguistic region. Downey hopes that pooling data resources across related under-resourced languages will help close the performance gap between these languages and mainstream NLP.